LATENT SPACES_ STEP INSIDE A COMPUTER MODEL_INSTALLATION

A film by Pedro Küster

sound and electronic media artist

projects, exhibitions, artwork

T1/2 is a sound art project exploring the acoustic memory of the Semipalatinsk Test Site, Kazakhstan. It is a collaboration between curator and artist Kamila Narysheva and myself, brought together through the British Council creative producers scheme. Also known as the Polygon, Semipalatinsk was the primary nuclear test site for the Soviet Union, subject to around 450 nuclear tests between 1949 and 1989. The project centres on how sound can capture and convey the intangible echoes of historical trauma, both human and ecological. The work was presented as a sound installation at 101 Dump Gallery, Almaty in May 2025.

Collaborating online from Sept 2024 to May 2025, we developed our approach for the piece, exploring the shared themes of deep time, technological materiality and transmutation. The process began with an expedition to the Semipalatinsk Test Site in December 2024, where Kamila captured field recordings of soil, water, air, and architectural remnants using hydrophones, geophones, and contact microphones.

Captured at different levels of strata, key sites for recording included the atomic lake, the abandoned city of Shagan, subterranean bunkers and the environmental wind on the steppe. This created a vast corpus of sound material from which to work and form the narrative for the piece. Some recordings were expansive environmental vistas, such as the wind and animal sounds on the steppe; others were industrial, claustrophobic and tense. Despite the prevailing historical narratives of decay and destruction, the sound material offered hope and signs of nature regenerating itself. Kamila's personal connection to the site (her father is from Semey) came through the material, with focused listening and engagement with the site through sound.

“And within that stillness, there’s a kind of quiet resilience. Grasses push through. Birds nest in old impact sites, horses graze along the road. The land hasn’t forgotten, but it’s learning to hold both damage and recovery at once.” Kamila Narysheva

PROCESS

We wanted an algorithm that could decay the sound material itself, so we developed a T1/2 (Half Life) patch in MaxMSP that deconstructed recorded samples from the Polygon, and used the results within our piece. A key reference point was Togzhan Kassenova’s book Atomic Steppe, which lists the half-lives of the radionuclides found on the steppe, including carbon, tritium and strontium. From here we mapped and translated these (often very long) time intervals into more musical temporalities (i.e. a number of seconds rather than years), so that a sample would start at full fidelity and move to total degradation along an exponential curve.
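As a rough illustration of the half-life idea, here is a minimal Python sketch. It is not the Max patch itself. The isotopes chosen for each element, the `seconds_per_year` scaling and the use of bit-depth reduction as the form of degradation are all assumptions made for the example.

```python
# Approximate physical half-lives in years. The specific isotopes are an
# assumption; the text above names only the elements.
HALF_LIVES_YEARS = {"tritium": 12.32, "strontium-90": 28.8, "carbon-14": 5730.0}


def musical_half_life(years, seconds_per_year=1.0):
    """Translate a half-life in years into seconds (hypothetical scaling)."""
    return years * seconds_per_year


def fidelity(t_seconds, half_life_seconds):
    """Fraction of fidelity remaining after t seconds of exponential decay."""
    return 0.5 ** (t_seconds / half_life_seconds)


def degrade(samples, sample_rate, half_life_seconds, max_bits=16):
    """Reduce bit depth over time so the sound halves in fidelity every half-life.

    `samples` is a list of floats in the range [-1.0, 1.0].
    """
    out = []
    for n, x in enumerate(samples):
        f = fidelity(n / sample_rate, half_life_seconds)
        bits = max(1, round(max_bits * f))   # fewer bits as fidelity decays
        levels = 2 ** (bits - 1)             # quantisation steps per polarity
        out.append(round(x * levels) / levels)
    return out
```

For example, `degrade(samples, 44100, musical_half_life(HALF_LIVES_YEARS["tritium"]))` would halve the effective bit depth roughly every 12 seconds under these assumptions.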

THE INSTALLATION & PUBLIC PROGRAMME

T1/2 was premiered in the basement gallery of 101 Dump, a former Soviet building in Almaty, earlier this year. A stereo setup with low blue light provided a contemplative space for embodied listening. Alongside was a public programme that invited artists, researchers, and community members, especially those from Semey and nearby regions, to contribute along themes of nuclear legacy and justice.

EDITORIAL AND RECEPTION

Anton Spice wrote a brilliant piece on our work on his Through Sounds substack. You can read ‘Sonic traces of a soviet nuclear test site’ with our interviews.

The 37 minute piece was broadcast in full on Refuge Worldwide, listen here

I was a selected artist on Immersive Assembly 4: Dreams and Echoes, a talent development programme exploring immersive arts and technology led by Mediale, in collaboration with the University of Oxford. The theme, ‘Dreams and Echoes’, invited us to “explore the potential of immersive media in interrogating consciousness and enabling new interpretations of reality.” On the programme we undertook tech workshops, conceptual dialogue and collaborated with neuroscientists to develop a prototype with another member of the artistic cohort to be presented at an industry event at the Cheng Kar Shun Digital Hub – Jesus College Oxford during the Oxford is Extraordinary season of Consciousness.

“Have you ever felt a dream slip into your day, like a shadow you just can’t shake?”

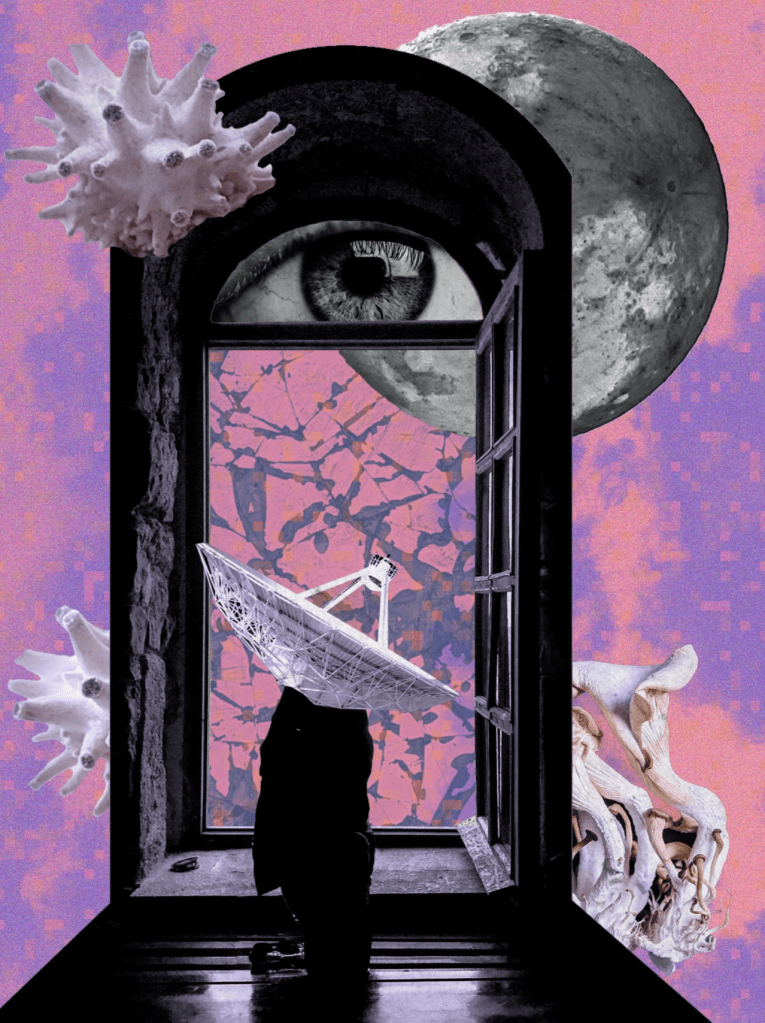

I collaborated with artist Michelle Collier on ‘To Lie Within Another’, an interactive installation exploring multi-layered consciousness, inspired by surrealist dream narratives, collage techniques and contemporary sleep science technological approaches.

APPROACH & TECHNOLOGY

We designed an interactive system using TouchDesigner and Arduino, with a gestural membrane interface, projection and layers of permeable gauze. The audience member pushed through a physical textile veil to reveal other layers of perception. These layers were animated digital photographic collages that appeared on screen, each layer morphing and blending the given fragmented state or reality. The computer system reflected the fragmented, transitory, fleeting and juxtaposed neurological patterns of association.

Michelle and I adopted surrealist techniques in our work (this was 2024, 100 years of Surrealism), keeping dream diaries and conducting sharing sessions in our own dream labs. These conversations informed our visual layers for the piece, exploring memory, grief and our relationship to technology as a mediating layer.

Our research was shaped by conversations with professors of Neuroscience Vladyslav Vyazovskiy and Russell Foster, exploring states of consciousness, notions of time and sensory physicality within dreaming.

PROTOTYPE

This was a stage one prototype, a proof of concept. We envisage this piece on a larger scale, working with more layers, more sensory inputs and a more complex computational system.

IA4 is supported by the Cultural Programme at The Schwarzman Centre, University of Oxford, the Cheng Kar Shun Digital Hub at Jesus College Oxford, and Mediale’s talent development focus supported by Arts Council National Portfolio Organisation funding.

By Vicky Clarke and Kathy Hinde

Rose Garden Broadcast amplifies and transmits the hidden elemental sounds below and above the surface of the Rose Garden and the surrounding environment of Mesnes Park, Wigan. It is a new collaboration by artists Vicky Clarke and Kathy Hinde for Light Night Wigan 2024, commissioned by Things That Go On Things and co-created with young people from Wigan.

Rose Garden Broadcast invites audiences to wear wireless headphones and tune in to three different ‘earth to sky’ frequency bands broadcast from electronic, illuminated radio sculptures housed in Victorian glass cloches. Though dormant in winter, Rose Garden Broadcast will ‘bring back the blooms’ as digital twins. The cloches will house LED screens displaying pixel art roses, digital doppelgangers of the rose species as created by local young people from Wigan.

LATENT SPACES is a work in progress for 2025 | Comprising an EP release in April, and a spatial sound installation in October.

LATENT SPACES explores our perceptions of sonic, computational and cerebral spaces, considering what happens to the materiality of sound in the latent space of a neural network model. In these hidden spaces where statistics meets alchemy, and sound becomes data, we imagine different symbolic realms of utopia, fear and technological myth. LATENT SPACES invites the listener to enter a computational model and experience a materiality in flux.

Combining machine learning and musique concrète, sound sculpture and electronics, the project begins with the AURA MACHINE EP, released on LOL Editions in April, and ends with the LATENT SPACES spatial sound installation in October 2025.

The work is created using custom concrète datasets of post-industrial field recordings, comprising echoes of industrial millscapes and material eras of electricity, glass and metal, which are used to train the PRiSM SampleRNN neural synthesis model. It builds on her artistic research since 2019 into machine learning and musique concrète as resident artist with NOVARS, University of Manchester in collaboration with PRiSM, Royal Northern College of Music, and ‘Neural Materials’, a Cyborg Soloists commission.

Latent: a state present but not yet manifest; hidden but not apparent.

LATENT SPACES is created by sound artist Vicky Clarke aka SONAMB. This work is made possible by Sound and Music’s In Motion programme. In Motion is supported by Arts Council England, Jerwood Foundation and Garrick Club Charitable Trust. With exhibition support from FutureEverything, as part of the Innovate UK-funded Cultural Accelerator programme.

From Sept 2024 – Feb 2025, I have been one of the R&D artists selected for the Cultural Accelerator programme, a new collaborative project between Future Everything, MediaCity Immersive Technologies Innovation Hub (MITIH) and Dream Lab, University of Salford to support artists developing work in immersive technologies.

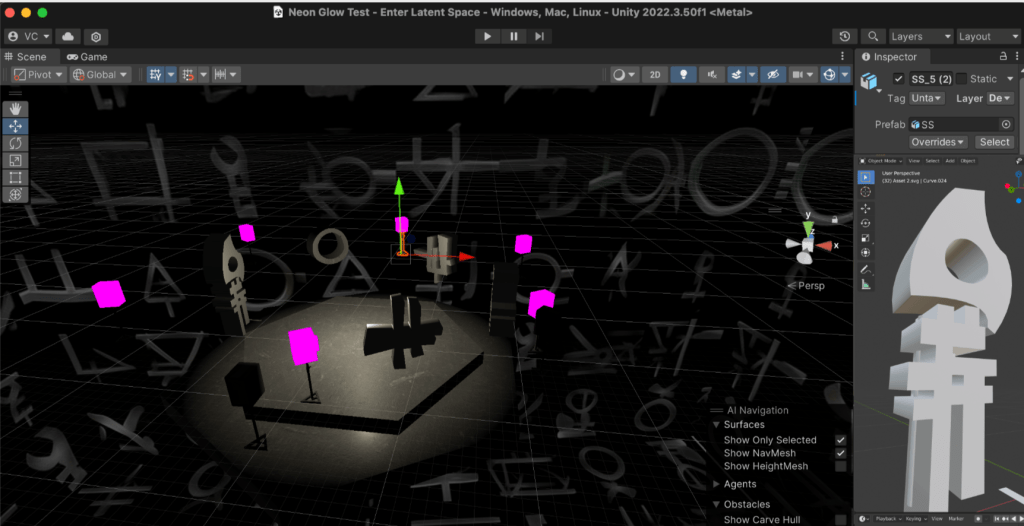

‘Enter Latent Space’ is a WIP audio visual project inviting audiences to step inside and experience a computational ‘latent’ space, opening up the ‘black box of AI’. This artwork will manifest as

[V2] a virtual prototype built in a games engine and experienced via a VR headset

[V1] a physical spatial sound installation, incorporating sound sculpture, neural synthesis, electronics and 6:1 speaker array

On the programme I have been working on [V2], learning to work in the Unity games engine to translate my 3D sculptures and build a 360 computational environment. I am experimenting with using Unity as a prototyping environment for a physical spatial sound installation. The idea is to create a 3D visual world in which to experiment with audience navigation and experience digitally, and to test different aesthetics in preparation for the real-world installation. Both prototypes will be shared publicly in October 2025 in an exhibition at Seesaw space.

Read about the other artists here: https://futureeverything.org/portfolio/entry/cultural-accelerator/

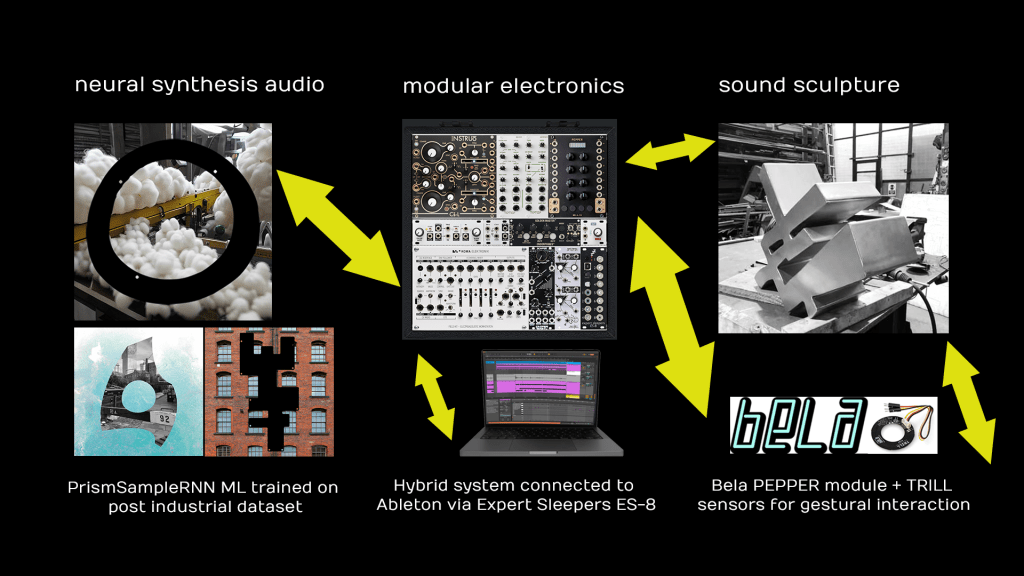

NEURAL MATERIALS is a new live AV show created by SONAMB, in collaboration with Sean Clarke on visuals. The piece debuted at Modern Art Oxford in spring 2024 and has subsequently been performed at the Creative Machines symposium at Royal Holloway, University of London. The piece is the result of a two year R&D commission by Cyborg Soloists, a UKRI funded project at Royal Holloway led by Dr Zubin Kanga. Cyborg Soloists is a 4 year programme partnering artists and digital technologies to create new work. I worked with Bela technologies, who created the Bela board, an ultra low latency sonic microcontroller for musicians and sound artists to create interactive artworks. I used their Pepper module as part of my modular setup, along with their Trill sensors for gestural interaction.

The project is a further exploration of Machine Learning and Musique Concrète, building upon my 2021 residency with NOVARS, University of Manchester (in collaboration with PRiSM, RNCM) where I developed methodologies for building Musique Concrète datasets for neural synthesis training.

For NEURAL MATERIALS I created a new performance system for AI, modular electronics and sound sculpture; a hybrid setup, with Ableton linking to the modular. With the Bela technology I used their PEPPER module for sample manipulation, controlled via their gestural sensors called TRILL. The aim was to be more hardware focused for live, and explore the ML materials more tangibly through gesture and feedback. To develop sound content for the project I undertook a series of studio experiments and workflows.

My sonic dataset for this project is ‘post industrial’ with each data class containing field recordings that represent a different material that tells the story of Manchester’s industrial past and present. Beginning on the outskirts of the city with cotton mill machinery at Quarry Bank Mill and journeying inwards towards the centre through the canal networks, we finish in the (property booming) city of luxury mill apartments and the shiny tower blocks of modern development and gentrification. This journey is represented by sonic data classes of cotton, water and noise.

Recording_ For the ‘Cotton’ class of field recordings, I was thrilled to record the machinery at Quarry Bank Mill. Built in 1784, it is one of the best preserved textile factories of the Industrial Revolution, now owned by the National Trust. I was privileged to gain access to the Cotton Processing Room and the Weaving Shed, where I used a mixture of directional and stereo mics to record the looped rhythms, unique timbres and drones, and the power up and down of these amazing historical artefacts, including the Draw Frame, the Slub Roving machine, the Carding Machine, the Ring Spinning Frame and the Lancashire Loom. My Dad trained as an apprentice electrician here in the 70s, cycling from Hulme to Styal every day, so it’s a special place for me.

Pattern Generating_ The dataset was used to train the PRiSM SampleRNN model. Post training I was interested in what ‘future rhythms’ the ML model would generate. Through feature extraction, would rhythms collide and be sonically interesting, or would they replicate the staccato analogue loops in the dataset? In the Ableton still below, I am analysing and comparing rhythmic patterns from the original machine field recordings with the exported ML audio post training. You can see the audio is classified by epoch, meaning the number of times the model has trained on the full set of data. You can also see the ‘temperature’ setting, a hyper-parameter used in ML to control the randomness of the model’s predictions.
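To illustrate what a ‘temperature’ setting does in general, here is a small Python sketch of temperature-scaled sampling. It is a generic example, not code from PRiSM SampleRNN; the function names and the toy `logits` values are hypothetical.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw model scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)                       # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_index(logits, temperature, rng=random):
    """Pick one output value at random according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Toy scores for four possible next audio values (hypothetical numbers).
logits = [2.0, 1.0, 0.5, 0.1]
cool = softmax_with_temperature(logits, 0.5)   # low temperature: more predictable
warm = softmax_with_temperature(logits, 2.0)   # high temperature: more random
```

A low temperature concentrates probability on the most likely value, so the output stays closer to what the model has learned; a high temperature spreads probability out, so less likely values are chosen more often.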

I enjoyed listening into the sounds, seeing if I could identify particular machines from the dataset. Using a midi-drum conversion was the clearest way to listen back to the rhythmic material, which was eclectic and brilliant nonsense that a human drummer could not play. You can see this from the velocity hits in the roll at the bottom. I sent these pulses as CV triggers to the modular and looped the ML sections to locate areas I wanted to work with. In this audio clip you can hear the different generated machine rhythms falling in and out of each other.

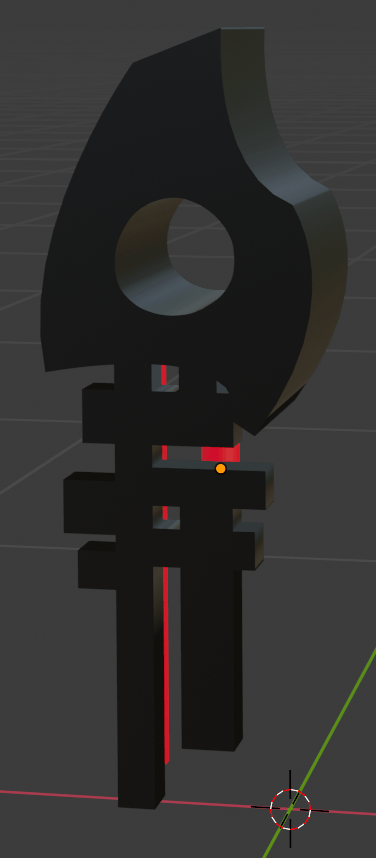

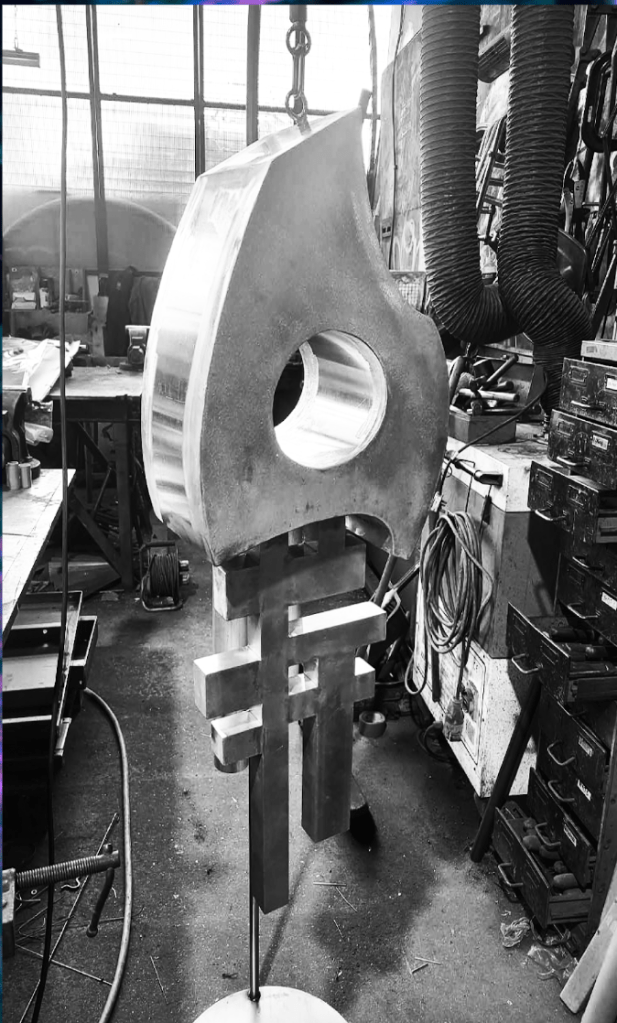

I’m working with a new metal sound sculpture, another in my series of AURA MACHINE icons (a visual symbolic language I’ve developed to represent the sound object in latent space). I’m interested in the acoustic potential of these metal sculptures and how these transmutational objects can be amplified, activated and fed back into the system for live performance. I mapped the frequency response of the physical sculpture at different points across its geometry using contact microphones positioned on each plane. There was a clear fundamental on each plane, but also evident are harmonics and partials that recur throughout the object. Here is the 3D model with the mapped frequencies.

Using the Bela PEPPER module, we wrote a PureData patch that would control the playback and manipulation of the AI cotton mill samples via touch. We used two TRILL sensors to do this: the square sensor controlled the volume and speed on an XY axis, and the circle sensor controlled a granulated loop through touch. The sensors were fitted to my sculpture and used for live improvisation throughout the set. Another DIY aspect of the modular setup included working with my EMF circuit, which sampled and amplified the live electricity of the modular and fed it back into the system. We designed and laser cut three bespoke units: a breakout board for the TRILL sensors, a CV controlled light reactive unit and a holster for the EMF circuit. Special thanks to Chris Ball and Luke Dobbin for helping me with the coding on this!
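The XY mapping for the square sensor can be sketched in a few lines of Python. This is only an illustration of the idea, not the PureData patch that ran on the Bela. The normalised 0 to 1 touch range, the choice of which axis drives which parameter, and the speed range of a quarter to four times are all assumptions.

```python
def map_range(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly rescale value from one range to another, clamping to the input range."""
    value = min(max(value, in_lo), in_hi)
    t = (value - in_lo) / (in_hi - in_lo)
    return out_lo + t * (out_hi - out_lo)


def square_touch_to_controls(x, y):
    """Map a normalised touch position (0..1 on each axis) to (volume, speed).

    Assumed mapping: x sets volume from silent to full, y sets playback speed
    on an exponential scale from 0.25x to 4x, with 1x at the centre.
    """
    volume = map_range(x, 0.0, 1.0, 0.0, 1.0)
    octaves = map_range(y, 0.0, 1.0, -2.0, 2.0)
    speed = 2.0 ** octaves
    return volume, speed
```

An exponential speed curve is used here so that equal finger movements produce equal pitch intervals, which tends to feel more even under the hand than a linear scale.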

I collaborated with digital artist Sean Clarke on this project, who expertly took my visual source materials and crafted them into a new audio reactive system for live performance using TouchDesigner. I love working and performing with Sean. His artwork has so much feel and energy, and he conveyed the sense of the materials in the dataset and the moods and changes I was trying to evoke.

I am still experimenting with this new performance system. The shows so far were the first outings for the piece, and I plan to keep experimenting with the sculpture (especially acoustically) and develop the improvisational aspects for any new NEURAL MATERIALS shows. In 2025 I plan to develop my practice into the realm of spatial installation, drawing on my methodologies and research from the past 5 years in sonic AI. More to come!

Cyborg Soloists, Royal Holloway, University of London: Zubin Kanga, Caitlin Rowley, Mark Dyer, Jonathan Packham

Sean Clarke: Live Visuals

Chris Ball & Luke Dobbin: PureData coding support

Nik Void : Music Mentor

Quarry Bank Mill: Suzanne Kellett and team!

PRiSM: RNCM for neural network training

Flux Metal & Neon Creations: Sculptural Fabrication

Dan Hulme, EMPRES, University of Oxford

NEURAL MATERIALS is dedicated to my inspirational Dad, Vince Clarke who passed away July 2024.

SLEEPSTATES.NET is a browser-based work where the user navigates to different states of algorithmic slumber, a sleep-cycle of audiovisual interactions that take over their screen. Exploring themes of machine addiction and autonomy, sleep sanctity, the networked condition and how citizens are subjected to emotion extraction via platform capitalism, these dream transmissions journey through the techno-emotional states of slumber we experience between humans and machines late at night. This piece accompanies SLEEPSTATES, the debut album from SONAMB.

Users experience AV screen-takeovers, interactive pop-up provocations and monitor their slumber status and machine addiction levels. They are asked to join SONAMB in defending sleep by ‘transmitting their frequency’ and breathing into the machine via an audio reactive noise-field, contributing to a collective citizen-white-noise-sleep-aid.

SLEEPSTATES.NET began in lockdown through late night internet explorations learning web coding and visual machine learning whilst experiencing sleep deprivation and questioning my mental health in relation to the machine. Documenting techno-emotional states of anxiety, addiction and inertia through audiovisual vignettes and code sketches, I sought to create a free space to commune collectively with the machine, an alternative to interfaces of power, data extraction and capitalism.

The piece takes inspiration from Jonathan Crary’s 24/7 (sleep = the last defence against capitalism) and MIT’s Dream Lab (sleep sound implantation). As a child of the 80s/90s, I grew up surrounded by computers as my Dad was a programmer. I was too young to take part in early utopian net communities but I do remember the aesthetic like a dream.

SUPPORT: The piece received R&D support via a remote residency for Manchester International Festival during lockdown, followed by creative web design mentoring with Studio Treble supported by Arts Council England.

PUBLIC SHOWINGS: The original SLEEPSTATES music video appeared at MUTEK Distant Arcades exhibition and online for Amplify festival at Somerset House. The artwork was shown at the 2023 NFT Biennale as part of the Sound Obsessed New City archive.

The AURA MACHINE research website is now live. The platform collates the research and creative outputs from my artist residency over the past two years with NOVARS, centre for innovation in sound at the University of Manchester, where I have been exploring the collision of musique concrete and machine learning. The residency was supported by European Arts Science Technology Network for Digital Creativity and in collaboration with PRiSM Lab (Practice Research in Science and Music) at the Royal Northern College of Music and was kindly supported by Arts Council England.

Framed by my research question “how can concrete materials and neural networks project future sonic realities?”, the site sets out the critical rationale for exploration: could machine learning, specifically sample based neural synthesis, be a tool for contemporary musique concrete?

Throughout the research I developed skills in building sonic concrete datasets, technical knowledge of neural synthesis and methodologies for listening, composition and performance when working with these materials. The musical output was in the form of my piece AURA MACHINE, whose first iteration was a 10 minute AV piece showcased at FutureMusic#3 event at RNCM.

‘What happens to the sound object when processed by a neural network?’

AURA MACHINE

‘A sound object has an AURA, can a machine generate an AURA?’

The piece took the listener on a journey through training a neural network, starting with the raw concrete materials (representing the sonic dataset), through to transmutation (model training) to the purely generated AI materials. Conceptually the piece played with both Pierre Schaeffer’s idea of the sound object and Walter Benjamin’s ‘The work of art in the age of mechanical reproduction’, with its idea of a piece of art being authentic to a time and place. This AV piece was further developed into a 20 minute live performance with sound sculpture and audio reactive visuals (collab with Sean Clarke), with performances at Manchester Science Museum and a lead feature for PatchNotes, Factmag.

The residency was also supported by Arts Council England, which meant I could develop new systems for algorithmic sound sculpture and circuit designs. These combined the creation of an ancient alchemical dataset for visual generation (I found many parallels between alchemy and modern day ML) with contemporary prototyping tools, including 3D geometric design with Blender and 3D printed models before professional fabrication.

One aim of the residency was to demystify machine learning, break out of the hidden black box and through examining both the inherent barriers and the current accessible tools, share insights and approaches for other artists to create with sonic AI. I created ‘DREAMING WITH MACHINES’ an educational project for young women with Brighter Sound to support others in using these tools and techniques. We looked at the technical history of AI, inherent bias in datasets, the positive aspects of the turn of creative AI and its potential to imagine future societies and realities. We discussed our relationship with machines and the participants created their own datasets and audio visual pieces which premiered online as ‘dream transmissions’ and were showcased at the Unsupervised#3 event at University of Manchester.

>>dream transmissions from the electrical imaginary>>

SLEEPSTATES is the debut release by SONAMB, the new music project from Manchester sound artist Vicky Clarke, released November 2022. Exploring machine addiction, broken radio transmissions and algorithmic sleep territories, the record takes the listener through a cycle of dream transmissions from the electrical imaginary; the techno-emotional states of slumber we experience between humans and machines late at night.

‘a glitchy experimental techno jerker’

Boomkat review

Created over the past three years via networked locations, sonic states combine transmission recordings between Manchester, Berlin and St Petersburg and lockdown internet noise experiments, bringing together her processes of sound creation with DIY electronics, sound sculpture, musique concrete and machine learning. The record explores the sonic materiality of our technologies, the sanctity of sleep and ideas of autonomy and control as we meld into our machines, blurring conscious boundaries of our waking and networked selves.

Reception: The album was described as a ‘glitchy experimental techno jerker’ by Boomkat and ‘a stunning collection’, receiving a full page feature in Electronic Sound magazine >>Read both reviews here>> Sleepstates was featured on Afrodeutsche’s The People’s Party show on BBC 6 Music, as SONAMB took on the unmixable challenge and played album track Sync Whole >>Listen to feature>> Album tracks also received airplay on NTS, Resonance FM, Camp Radio and Repeater radio.

SLEEPSTATES.NET: The release is accompanied by SLEEPSTATES.NET, a net-art piece featuring music from the record.

Credits: All tracks are written, produced and mixed by SONAMB, except track 7, mixed by Francine Perry and tracks 2 & 9, co-mixed by SONAMB and Francine Perry. SLEEPSTATES is mastered by Katie Tavini, Weird Jungle.

Listen: The album is self-released on Control Data Records, and is available on tape cassette and digital download direct from Bandcamp and Boomkat.