
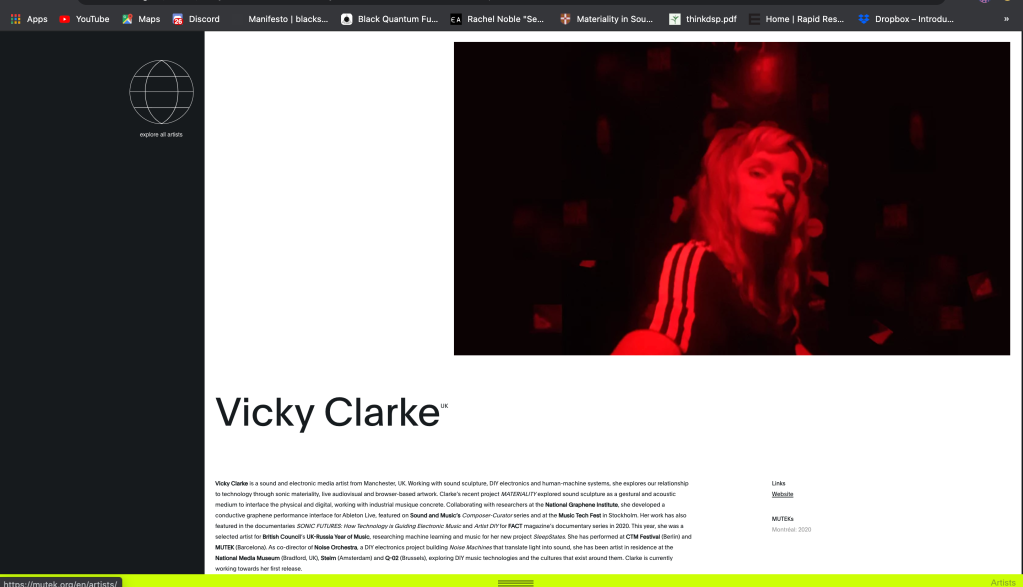

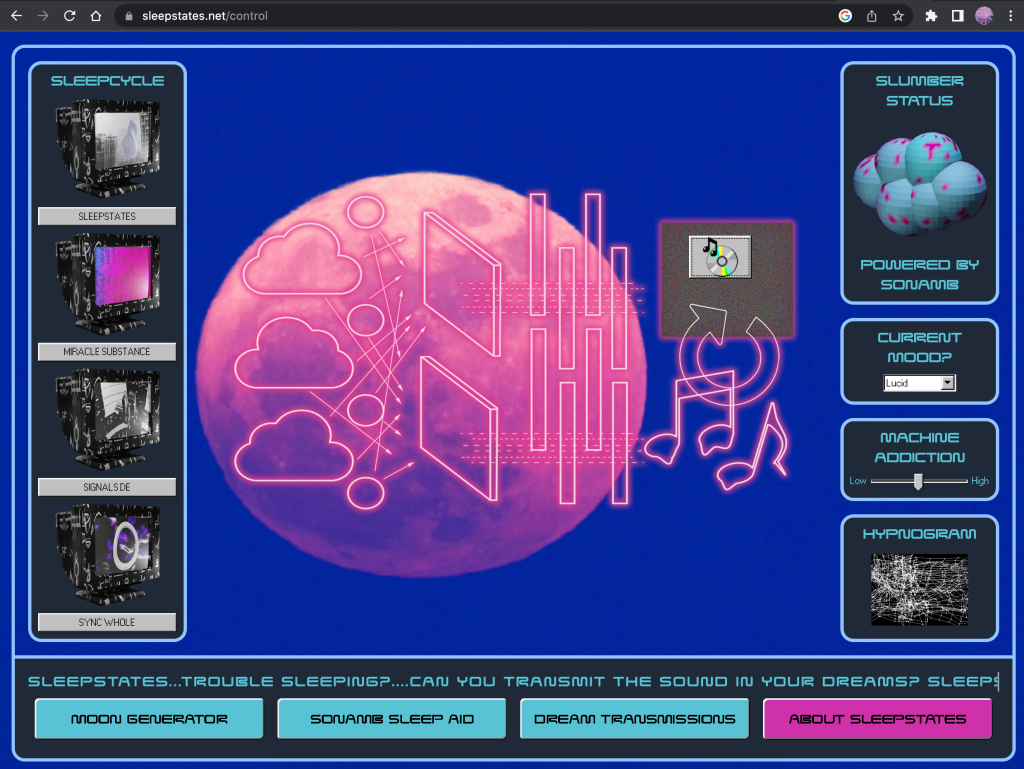

SLEEPSTATES.NET is a browser-based work in which the user navigates through different states of algorithmic slumber: a sleep cycle of audiovisual interactions that take over their screen. Exploring themes of machine addiction and autonomy, sleep sanctity, the networked condition, and how citizens are subjected to emotion extraction via platform capitalism, these dream transmissions journey through the techno-emotional states of slumber that unfold between humans and machines late at night. This piece accompanies SLEEPSTATES, the debut album from SONAMB.

Users experience AV screen takeovers and interactive pop-up provocations, and monitor their slumber status and machine-addiction levels. They are asked to join SONAMB in defending sleep by ‘transmitting their frequency’: breathing into the machine via an audio-reactive noise field, contributing to a collective citizen-white-noise-sleep-aid.

SLEEPSTATES.NET began during lockdown, through late-night internet explorations in which I learned web coding and visual machine learning whilst experiencing sleep deprivation and questioning my mental health in relation to the machine. Documenting techno-emotional states of anxiety, addiction and inertia through audiovisual vignettes and code sketches, I sought to create a free space to commune collectively with the machine, an alternative to interfaces of power, data extraction and capitalism.

The piece takes inspiration from Jonathan Crary’s *24/7* (sleep as the last defence against capitalism) and MIT’s Dream Lab (sleep sound implantation). As a child of the ’80s and ’90s, I grew up surrounded by computers because my dad was a programmer. I was too young to take part in the early utopian net communities, but I do remember their aesthetic, like a dream.

SUPPORT: The piece received R&D support via a remote residency for Manchester International Festival during lockdown, followed by creative web-design mentoring with Studio Treble, supported by Arts Council England.

PUBLIC SHOWINGS: The original SLEEPSTATES music video appeared in MUTEK’s Distant Arcades exhibition and online for the Amplify festival at Somerset House. The artwork was shown at the 2023 NFT Biennale as part of the Sound Obsessed New City archive.